System Verification Test Plan and Its Major Areas of Concern

Process of Verification & Validation of software systems is quite tedious but essential. Especially for mission-critical systems involving risk to some state-of-the-art-technology or risk to human life or risk to large size investments, robust system-level testing is deployed to ensure the correct operation of the system.

This article, focus on the verification part that is concerned with the testing of the system functions against the conditions specified in the requirements document being essential in any critical system.

System Verification Test or SVT plan serve many important functions for the software testing engineer and becomes a road map for him/her to follow and a yardstick to measure progress against. It also provides a vehicle for others to his/her ideas and offer additional suggestions.

SVT is the point where the entire package comes together for the first time, with all components working together to deliver the project�s intended purpose. It�s also the point where we move beyond the lower-level, more granular tests of FVT (Function Verification Testing), and into tests that take a more global view of the product or system. SVT is also the land of load and

stress. When the code under test eventually finds itself in a real production environment, heavy loads will be a way of life. That means that with few exceptions, no SVT scenario should be considered complete until it has been run successfully against a backdrop of load/stress.The system tester is also likely the first person to interact with the product, as a customer will. Once the SVT team earns a good reputation, the development team may begin to view them as their first customer.

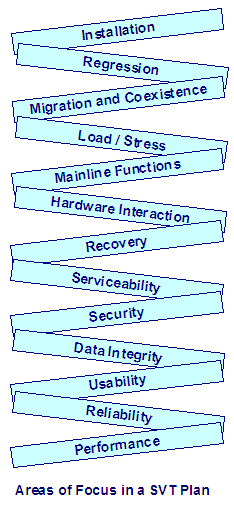

Areas of concern addressed in a SVT plan:

The SVT plan must ensure that every requirement specified in the requirement document must be testable, and all these requirements must be tested. Few such aspects are:

1) Installation aspects:

It is quite obvious that before you can test new software you must install it. But installation testing goes beyond a single install. You will need scenarios to experiment with multiple options and flows across a variety of hardware environments and configurations.

In fact, this testing could be done during FVT rather than SVT if the FVT team has access to the right mixture of system configurations. Regardless of when the testing is done, after each installation it�s important to exercise the software to see if it�s truly whole and operable.

Uninstall scenarios should be covered as well, as should the ability to upgrade a prior release to the new one, if supported.

Your customer�s first impression of the software will come at the time of installation, hence an intelligent software testing engineer ensures that it is a good one.

2) Regression testing aspects:

The idea behind regression testing is quite simple to see if things that used to work still do. Production users insist on this kind of continuity. Testing is required because whenever new function is introduced into a product, it almost inevitably intersects and interacts with existing code – and that means it tends to break things. This is what good software testing engineers refer to as a new release of software stimulating the discovery of defects in old code.

Regression testing is best accomplished through a collection of test cases whose execution is automated through a tool. These test cases come from past tests, of course. It is difficult to imagine something more effective at discovering if an old function still works than the test cases that were used to expose that function in the first place.

3) Migration and Coexistence aspects:

The intent of migration testing is to see if a customer will be able to transition smoothly from a prior version of the software to the new one. Which prior release? Ideally, all currently supported prior releases, though sometimes that isn�t practical.

If we call the new release under test “n”, then at a minimum you�ll need to test migration from release n-1 to n. It can also be useful to test migration from releases n-2 and n-3 as well.

This gets especially interesting if the user will be allowed to migrate from, say, the n-3 release directly to the n release, without first moving through the interim releases, since the potential for trouble in this case is magnified. However, the longer n-1 has been available and the more pervasive its use, the less important it becomes to test older versions.

4) Load or Stress Testing aspects:

Load / stress is the foundation upon which virtually all of SVT for multithreaded software is based, either as the primary focus or as a backdrop against which other tests are executed.

This testing goes beyond any stress levels achieved during initial regression testing, reaching to the ultimate levels targeted for the project.

There are two angles to view the load testing or stress testing;

a) First angle is the deeper one, which primarily aims for throughput-related targets. You seek timing and serialization bugs (also called race conditions) that only extreme levels of stress will expose.

Why does stress have this effect? If you�ve ever tried running anything on a busy server, you know that saturating the system with work slows everything down. Tasks competing for precious resources must wait in line longer than normal. This widens timing windows around multithreaded code that hasn�t been correctly serialized, allowing bugs to reveal themselves.

b) Second one is the wider angle of load / stress testing addresses maximizing volumes and resource allocations. It is a form of limits testing done on a system-wide scale while the program under test is processing real work.

5) Mainline Function aspects:

This is similar to what is done in FVT, in that you are targeting new and changed functionality. But rather than narrowly focusing on function at the component level, your scope is expanded to view it end to end. Whereas in FVT you would exhaustively exercise every aspect of individual component interfaces, in SVT you should devise scenarios to exhaustively exercise the entire software package�s supported tasks from an end-user perspective. You wouldn�t try every combination of inputs to a system command. Rather, you would use that command to perform an action or, in concert with other commands, enact a chain of actions as a customer would.

You will need to check if complimentary functions work together appropriately. Of critical importance is performing these tests against a backdrop of heavy load/stress, because functions that seem to work fine in a lightly loaded FVT environment often fall apart in a high-stress SVT environment.

6) Hardware Interaction aspects:

This is really a special case of mainline function testing, but because of its tie-in with an expensive hardware resource, it is worthwhile to document separately.

There are really two aspects to this focus area. First, if the software under test has explicit support for a new piece of hardware, as is often the case for operating systems, emulators, and networking tools, then scenarios must be included to test that support.

Second, if the software has any implicit dependencies or assumptions about hardware that it will run on or interact with, then scenarios must be included to put those factors to the test. What do we mean by implicit dependencies or assumptions? For example:

# Timing loops based on processor clock speed

# Network bandwidth or latency dependencies

# Memory availability

# Multitasking on a uniprocessor versus a multiprocessor

# I/O latency

# Reliance on quirks in the implementation of an architecture or protocol

It is a good practice for software testing engineers to test software across a range of available hardware environments to see how it reacts.

7) Recovery aspects:

Recovery testing is just as important in SVT as it is in FVT. However, in SVT the scope broadens from a component view to a full product view. The need to restart cleanly after a crash expands to consider system-wide failures. The impact of clustered systems and environmental failures begins to come into play.

8) Serviceability aspects:

Errors are inevitable. Serviceability support responds to that fact by providing features such as logs, traces, and memory dumps to help debug errors when they arise. Thoroughness counts here.

Customers take a dim view of repeated requests to recreate a problem so that it can be debugged, particularly if that problem causes an outage. They have already suffered once from the defect; they want it fixed before they suffer again. In particular, they expect software to have the capability for first failure data capture (FFDC). This means that at the time of initial failure, the software�s serviceability features are able to capture enough diagnostic data to allow the problem to be debugged. For errors such as wild branches, program interrupts, and unresolved page faults, FFDC is achievable.

System-level testing are aimed to explore serviceability features� ability to achieve FFDC in a heavily loaded environment. Rather than defining specific serviceability scenarios in the test plan for FFDC, the usual approach is “test by use.” This means the software testing engineers use the serviceability features during the course of their work to debug problems that arise on a loaded system, just as is done in production environments. Any weaknesses or deficiencies in those features should then be reported as defects.

9) Security aspects:

Probing for security vulnerabilities during SVT is similar to what is done during FVT, but with a system-level focus. For example, this would be the place to see how your software holds up under a broad range of denial-of-service (DOS) attacks. Such attacks don�t try to gain unauthorized access to your system, but rather to monopolize its resources so that there is no capacity remaining to service others.

Some of these attacks have telltale signatures that enable firewalls and filters to protect against them, but others are indistinguishable from legitimate traffic. At a minimum, your software testing should verify that the software can withstand these onslaughts without crashing. In effect, you can treat DOS attacks as a specialized form of load and stress.

10) Data Integrity aspects:

Certain types of software, such as operating systems and databases, are responsible for protecting user and system data from corruption. For such software, testing to ensure that data integrity is maintained at all times, regardless of external events, is vital.

It is also tricky, since most software assumes that the data it uses is safe, it often won�t even notice corruption until long after the damage is done.

11) Usability aspects:

In a perfect world, end user interfaces of the program would be designed by human factors and graphic arts specialists working hand-in-hand with the developers of the program. They would take great pains to ensure that input screens, messages, and task flows are intuitive and easy to follow. In reality, interfaces are often assembled on a tight schedule by a developer with no special training. The results are predictable.

Thus, it may fall upon the test team to assess how good an interface is. Software testing engineers use two approaches like a) Explicit testing and b) Implicit testing for usability testing .

12) Reliability aspects:

Also known as longevity testing, the focus of this area is to see if the system can continue running significant load/stress for an extended period.

Do small memory leaks occur over several days which eventually chew up so much memory that the application can no longer operate?

Do databases eventually age such that they become fragmented and unusable?

Are there any erroneous timing windows that hit so infrequently that only a lengthy run can surface them?

The only way to answer some of these questions is to try it and see. Reliability runs typically last for several days or weeks. Frequently there are multiple reliability scenarios, each based on a different workload or workload mix. Also, because a piece of software must be quite stable to survive such prolonged activity, these scenarios are usually planned for the end of the system testing cycle.

13) Performance aspects:

A clear distinction should be made between system testing and performance testing, since the goals and methodologies for each are quite different. Explicit performance measurement is not normally a part of SVT. However, some obvious performance issues may arise during system test. If simple actions are taking several minutes to complete, throughput is ridiculously slow, or the system can�t attain decent stress levels, no scientific measurements are required to realize there�s a problem – a few glances at your watch will do the trick.

This is known as wall clock performance measurement. An SVT plan should at least note that wall clock performance issues would be adequately watched and addressed.

Dear Readers !!!!! Validation is the second important part of V&V process. It is the process of software testing to ensure that the software & the entire system behaves correctly while interacting with other systems & components.

I will be publishing a descriptive post on validation very shortly.

Many More Articles on Software Testing Approaches

An expert on R&D, Online Training and Publishing. He is M.Tech. (Honours) and is a part of the STG team since inception.

Thanks to Team softwaretestinggenius.com !!!I have successfully cleared ISTQB foundation level exam with the help of this Site.