How to Perform Poor Testing: A Primer for Self-Improvement

This article is not meant to spread negativity in the software testing community; rather, it points out the mistakes that poor testers make in real-world projects. It is best viewed as an opportunity for self-improvement in our software development efforts.

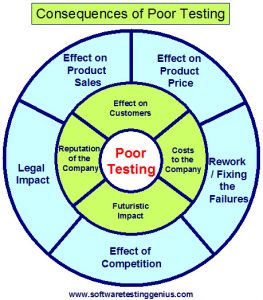

Poor testing harms the customer's experience of the software product. That, in turn, dents sales of the product and affects the profitability of the company. On occasion it can also lead to legal consequences.

Poor testing can prove more expensive in the long run. The worst part is spending many hours and a considerable amount of money on software testing and still missing many problem areas and non-conformances in the product. This wastes organizational resources and, at the same time, gives the testing team and the organization a false sense of security.

Important Do’s & Don’ts of Poor Testing:

Don’ts of Poor Testing:

Never do the following if you want to do poor testing:

1) Do not Add Stress:

We cannot learn much about a software product by testing it at a low level of rigor. Hence it is essential that the product is tested against the most severe levels of stimulus.
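As a minimal sketch of adding stress, the snippet below keeps doubling the load on a system until it fails and reports the first load that broke it. The names `find_breaking_load` and `fragile_process`, and the 5000-item limit, are hypothetical and exist only for illustration; a real product would be driven through its own interface.

```python
def find_breaking_load(process, start=1, factor=2, max_load=1_000_000):
    """Multiply the load by `factor` until `process` raises.

    Returns the first failing load, or None if nothing failed up to max_load.
    """
    load = start
    while load <= max_load:
        try:
            process(load)
        except Exception:
            return load
        load *= factor
    return None


def fragile_process(n_items):
    """Hypothetical system under test: copes with at most 5000 items."""
    if n_items > 5000:
        raise MemoryError("buffer exhausted")
    return n_items


def sturdy_process(n_items):
    """Hypothetical system that never fails within the tested range."""
    return n_items


print("first failing load:", find_breaking_load(fragile_process))
```

A test that stops at a comfortable load of, say, 100 items would never reveal where this hypothetical system gives way.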

2) Do not test beyond the Specified Limits:

Product specifications remain a good starting point; however, on many occasions the specifications do not capture the full range of stimulus actually applied to the product in the field. In many cases the product passes testing against the specifications but fails during actual use. External environments, together with variations in key areas of the product design, add up to produce a software failure in the field.

Understanding the true demands on the product once it is deployed at the client's end is a challenging and time-consuming task. Capturing the information requires tedious instrumentation of sample units, and gathering a variety of example uses of the product, with enough sample size and variation to be statistically meaningful, takes time. Software testing engineers depend heavily on standards during the product development stages. Standards are undoubtedly a starting point; however, the percentage of the target customer population that a standard represents can always be questioned. It is very common for a product to pass against its specification only to fail in the field. Testing limited to the specifications is therefore an extremely shortsighted methodology.
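One way to look past the specified limits is to probe values outside the specified range as well as at its edges. The sketch below is only an illustration: `probe_values`, `scale_to_percent`, the 0 to 200 range and the 50% overshoot are all assumptions, not part of any standard.

```python
def probe_values(spec_min, spec_max, overshoot=0.5):
    """Return test inputs at, inside and beyond the specified limits."""
    span = spec_max - spec_min
    return [
        spec_min - overshoot * span,   # below the specified range
        spec_min,                      # lower limit
        (spec_min + spec_max) / 2,     # nominal mid-point
        spec_max,                      # upper limit
        spec_max + overshoot * span,   # above the specified range
    ]


def scale_to_percent(value, spec_min=0, spec_max=200):
    """Hypothetical function under test; specified only for 0..200."""
    return 100 * (value - spec_min) / (spec_max - spec_min)


def check_outputs(func, spec_min, spec_max):
    """Map each probe input to True if the output stays within 0..100."""
    return {v: 0 <= func(v) <= 100 for v in probe_values(spec_min, spec_max)}


for value, ok in check_outputs(scale_to_percent, 0, 200).items():
    print(value, "ok" if ok else "OUT OF RANGE")
```

Here the hypothetical function behaves at both limits and misbehaves just outside them, which a test confined to the specification would never show.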

3) Do not test with Unusual Combinations of Events:

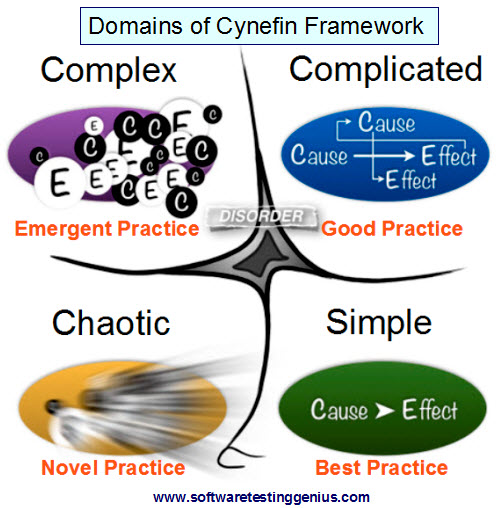

The real world is far more chaotic and complicated than is generally believed. Software testing limited to typical combinations is extremely unrealistic and shortsighted. The Cynefin decision-making framework describes a range of systems from simple to chaotic:

# Simple system: Here the relationship between cause and effect is obvious to all.

The approach used is

1) Sense – See what’s coming in

2) Categorize – Make it fit predetermined categories

3) Respond – Decide what to do

Here best practice can be applied.

# Complicated system: Here the relationship between cause and effect requires some investigation and the application of expert knowledge.

The approach used is

1) Sense – See what’s coming in

2) Analyze – Investigate with the help of expert knowledge

3) Respond – Decide what to do

Here good practice can be applied.

# Complex system: Here the relationship between cause and effect can only be perceived in retrospect, not in advance.

The approach used is to

1) Probe – Provide experimental input

2) Sense – Successes or Failures

3) Respond – Decide what to do next i.e. amplify or reduce

Here emergent practice can be applied.

# Chaotic system: Here we cannot find any relationship between cause and effect at the system level.

The approach used is to

1) Act – Try to stabilize

2) Sense – Successes or Failures

3) Respond – Decide what to do next

Here novel practice can be discovered.
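Returning to unusual combinations of events: a simple way to move beyond the typical cases is to enumerate every combination of event states and single out those the team does not normally exercise. This is a minimal sketch; the factor names and the list of "typical" combinations are invented for illustration.

```python
from itertools import product


def all_combinations(factors):
    """Return every combination of factor values as a list of dicts."""
    names = list(factors)
    value_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Hypothetical events that can coincide while the product is in use
factors = {
    "network": ["up", "down"],
    "disk": ["ok", "full"],
    "action": ["save", "cancel", "retry"],
}

# Combinations the team already tests routinely (an assumption)
typical = [
    {"network": "up", "disk": "ok", "action": "save"},
    {"network": "up", "disk": "ok", "action": "cancel"},
]

combos = all_combinations(factors)
unusual = [combo for combo in combos if combo not in typical]

for combo in unusual:
    print(combo)
```

Even this tiny example leaves most of the combinations outside the typical set, for instance a retry while the network is down and the disk is full.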

4) Do not check all Inputs and Outputs:

A few project managers habitually say, “If we do our design and development work perfectly, there won’t be any need for software testing.” People with this mentality restrict their testing effort to selected inputs and outputs of the product. Development work involves several people and the coordination of many events, and it demands constant attention to even minute details. As long as human beings are involved in software development, there is a need to test with a greater measure of rigor. Every neglected input or output is an open area for failures, which will come back from the customer sooner or later.

5) Do not follow up:

Finding a software defect is only the beginning of the testing effort. Once a bug is reported, it must be followed up with corrective action. Will the defect be corrected?

If the bug is to be corrected, follow-up testing is absolutely necessary. There have been many instances in which a bug was logged in the reporting system, the development team declared that it understood the bug and produced a new part in response, and the software testing engineer, taking this at face value, closed the bug report.

Eventually the new parts failed in the field after shipping. The gap was the absence of verification confirming that the corrective action had actually corrected the defect. Simply reporting the defect cannot be taken as the end of the verification work. It is important to ensure that the corrective actions have adequately addressed the defects and have not introduced any new ones.
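The follow-up can be sketched as a check that re-runs the reported defect against the corrected code and also re-runs neighbouring cases to catch newly introduced defects. Everything below is hypothetical: `verify_fix`, the two `average_*` functions and the test data are invented for illustration.

```python
def verify_fix(func, reported_case, regression_cases):
    """Re-run the reported defect and related cases; return failure messages.

    Each case is a pair (args_tuple, expected_result).
    An empty list means the fix is verified.
    """
    labelled = [("reported", reported_case)]
    labelled += [("regression", case) for case in regression_cases]

    failures = []
    for label, (args, expected) in labelled:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{label} {args!r}: raised {type(exc).__name__}")
            continue
        if actual != expected:
            failures.append(f"{label} {args!r}: got {actual!r}, expected {expected!r}")
    return failures


def average_before_fix(values):
    """Hypothetical defective code: crashes on an empty list."""
    return sum(values) / len(values)


def average_after_fix(values):
    """Hypothetical corrected code."""
    return sum(values) / len(values) if values else 0.0


reported = (([],), 0.0)                          # the defect in the bug report
regressions = [(([2, 4],), 3.0), (([5],), 5.0)]  # behaviour that must not change

print(verify_fix(average_before_fix, reported, regressions))
print(verify_fix(average_after_fix, reported, regressions))
```

Only when the list of failures is empty for the delivered fix should the bug report be closed.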

Do’s of Poor Testing:

Always do the following if you want to do poor testing:

1) Let the designers Create the Test Plan:

A conflict of interest generally arises whenever test plan creation is entrusted to the developers.

Ideally, the test plan should be created by an independent verification and validation team, whether external or internal. It is good practice to offer the test plan to the designers for review, to eliminate any obvious misinterpretation or misunderstanding. However, designers must not be made the creators or the final judges of the test plan. As a best practice, the customer may be involved in approving the base test plan, although the customer’s involvement can be avoided when creating rigorous product characterization test plans.

2) Test Only against the Nominal Input Values:

For instance, one software testing group had for many years conducted its tests at the nominal values specified by the relevant test standards. However, a steady nominal value may not be realistic in the field, and many professionals would find no reason to test at this value on the bench. The remedy is to switch to random values around the nominal ones. This approach applies equally to other environmental values that can affect system performance.
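A minimal sketch of drawing random values around a nominal one is shown below. The function name, the 12.0 nominal value and the 10% tolerance are assumptions for illustration; the seed is there only so that a failing run can be reproduced.

```python
import random


def values_around_nominal(nominal, tolerance, count, seed=None):
    """Return `count` random values within +/- tolerance (a fraction) of nominal."""
    rng = random.Random(seed)  # seeded generator keeps failures reproducible
    low = nominal * (1 - tolerance)
    high = nominal * (1 + tolerance)
    return [rng.uniform(low, high) for _ in range(count)]


# Hypothetical example: a nominal input of 12.0 varied by +/- 10%
samples = values_around_nominal(12.0, 0.10, count=50, seed=42)
print(min(samples), max(samples))
```

Each sample can then be fed to the system under test in place of the single steady nominal value.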

3) Prepare Test Plans that are not consistent with Historical Evidence:

Failing to prepare plans that are well within your means can be worse than not planning at all. Overlooking the past is another source of testing failure. A schedule that ignores the organization's past performance and level of expertise offers false hope, so every schedule should be realistic and achievable. Every time team leaders try to crash the testing schedule, they run the risk of mistakes by fatigued software testing engineers.

4) Provide a comfortable air-conditioned Environment:

Even when the software product is going to be used in an air-conditioned environment, testing need not be confined to a similar environment just to meet the customer’s expectations. It is quite difficult to measure and quantify the product’s exposure to the variety of stimuli it will face during end use by the customer.

5) Forget About Extreme Situations:

Software testing engineers generally use extreme situations to provoke product failures. Extreme environments are sometimes created to cause failure more rapidly than nominal environmental limits would. Even if users are adequately cautioned to avoid extreme environments, testing should still be done under adverse conditions to learn the conditions under which the product will fail.

6) Ignore the FMEA or Fault Tree:

Many software development organizations are unaware of the benefits of tools such as FMEA (failure mode and effects analysis) or fault trees, and hence don’t use them. Many others use such tools only sparingly, at the fag end of development: after the design is complete or when the product is about to go into production. Either way, ignorance or inadequate use of these tools leads to the same outcome: luck. The product may or may not succeed in end use. Used in time, these tools and techniques greatly help in making constructive changes to the product design.

Concluding tips to avoid Poor Testing:

1) Evaluate your metrics: Many organizations use software testing metrics to monitor projects and to decide whether their products are ready to ship. Test managers must ensure the quality of those metrics; they must not be vague or misleading.

2) Maintain an effective tester’s toolbox: It could hold several test case design techniques, such as classification trees, design-of-experiments methods, combination testing and pair-wise testing.

3) Avoid chaos caused by over-adherence to processes: Software testing engineers need to guard against excessively mechanical execution of activities. Procedures must be designed so that they generate test results effectively.

4) Maintain self-maintaining tests: To cope with the rapid obsolescence of test scripts, create your own system in which a “model” is regenerated every time the developers make changes.

5) Build a culture of concurrent development teams: Encourage software testing engineers and programmers to work together with a whole-team approach, each bringing their own perspectives and unique strengths to the success of the project.
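As an illustration of one item in the tester’s toolbox from tip 2, the sketch below builds a pair-wise test suite with a simple greedy search. It is not an optimal or production-grade generator, and the factor names are hypothetical. The idea is that every pair of values from any two factors appears in at least one test case, usually with far fewer cases than the full combination set.

```python
from itertools import combinations, product


def pairwise_suite(factors):
    """Greedily pick test cases until every value pair is covered once."""
    names = list(factors)

    def pairs_of(case):
        # All (factor, value) pairs that a single test case exercises
        return {((a, case[a]), (b, case[b])) for a, b in combinations(names, 2)}

    # Candidate pool: the full set of combinations
    candidates = [
        dict(zip(names, values))
        for values in product(*(factors[name] for name in names))
    ]
    uncovered = set().union(*(pairs_of(case) for case in candidates))

    suite = []
    while uncovered:
        # Take the candidate that covers the most still-uncovered pairs
        best = max(candidates, key=lambda case: len(pairs_of(case) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite


# Hypothetical configuration factors
factors = {
    "browser": ["firefox", "chrome"],
    "os": ["linux", "windows"],
    "locale": ["en", "de"],
}

suite = pairwise_suite(factors)
for case in suite:
    print(case)
print(len(suite), "cases instead of 8")
```

The greedy search examines the full combination set, so it only suits small factor tables; larger ones call for a dedicated pair-wise tool.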


The author is an expert in R&D, online training and publishing. He holds an M.Tech. (Honours) and has been part of the STG team since its inception.