Measurement of Quality of the Testing and Test Automation Process

Although test measurement profiles differ from organization to organization, software testing experts emphasize that the quality of the testing and test automation process must be measured in some way or other. They hold that anything can be made measurable in a way that is better than not measuring it at all.

Only through measurement is it possible to monitor and control the testing process, and so for software testing managers to optimize it to suit their organization's situation. Measurements must be realistic: they should measure what is achievable, address the organizational objectives and, most importantly, be useful. To be useful, a measurement must be related to the objectives, and effort should be focused on making the measures easy to take.

What are the Quality Attributes of Test Automation that should be measured?

Before listing the quality attributes to measure, we need to know the objectives against which we intend to measure them. As a good practice, we should not try to use every attribute that comes to mind. It is better to choose three or four attributes that give the most useful information about whether or not we are achieving our objectives.

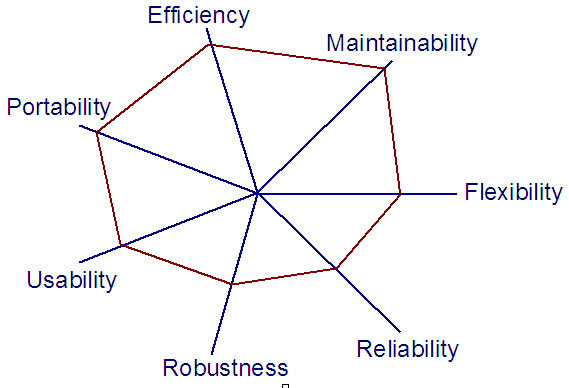

The seven prime attributes of test automation are:

1) Efficiency

2) Maintainability

3) Reliability

4) Flexibility

5) Usability

6) Robustness

7) Portability

A Kiviat diagram of a typical automation profile is shown in the accompanying figure. Depending on the organization's objectives, each attribute is scored along one spoke of the diagram, with better values placed farther from the center.
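A Kiviat diagram is usually read by eye. If a single number is wanted to compare two profiles, one common summary (not something prescribed by the approach described here) is the area of the polygon the scores enclose. The sketch below assumes a hypothetical 0 to 10 score per attribute and equally spaced spokes; both profiles are invented for illustration. The area depends on the order of the spokes and hides trade-offs between attributes, so it supplements the diagram rather than replacing it.

```python
import math

# The seven attributes, in the order they are drawn around the diagram.
ATTRIBUTES = ["efficiency", "maintainability", "reliability", "flexibility",
              "usability", "robustness", "portability"]

def kiviat_area(scores: dict[str, float]) -> float:
    """Area of the polygon formed by scores on equally spaced spokes.

    Adjacent spokes are 2*pi/n apart, so each pair of neighbouring scores
    (r_i, r_next) encloses a triangle of area 0.5 * r_i * r_next * sin(2*pi/n).
    """
    values = [scores[name] for name in ATTRIBUTES]
    n = len(values)
    angle = 2 * math.pi / n
    return 0.5 * math.sin(angle) * sum(
        values[i] * values[(i + 1) % n] for i in range(n)
    )

# Hypothetical profiles on a 0-10 scale (higher = farther from the center).
early = dict(zip(ATTRIBUTES, [3, 2, 5, 2, 6, 2, 1]))
mature = dict(zip(ATTRIBUTES, [7, 7, 8, 6, 6, 6, 5]))

print(f"early profile area:  {kiviat_area(early):.1f}")
print(f"mature profile area: {kiviat_area(mature):.1f}")
```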

All these attributes along with their scales of measurement are described below.

1) Efficiency:

Efficiency is related to cost. It is generally one of the main reasons people want to automate testing: to perform their tests with less effort or in a shorter time. Early automation efforts are likely to be less efficient than manual testing, but a mature automation profile should be more efficient than manual testing.

In order to measure test automation efficiency, we need to know about the cost of the automation effort. The cost of automating includes the salary and overhead cost of the people and the time and effort they spend in various activities related to test automation. It also includes the cost of hardware, software, and other physical resources needed to perform the automated tests.

The simplest measure is the cost of automated testing as a whole, which can then be monitored over time. Automated testing is composed of a number of different activities, which could be measured separately if more detail were required. Some activities, such as the design of new tests, would incur a similar cost whether the tests were executed manually or automatically. Other activities, such as test execution, will have a significantly different cost.
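A minimal sketch of this measure, assuming effort is logged per activity and converted to cost with a single loaded hourly rate (salary plus overhead), and that hardware, software and other resource costs are recorded as one separate figure. The activity names, rate and hours are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ActivityLog:
    activity: str        # hypothetical activity name, e.g. "execution"
    effort_hours: float  # working hours people spent on it

def automation_cost(logs: list[ActivityLog], hourly_rate: float,
                    resource_cost: float) -> tuple[dict[str, float], float]:
    """Return (people cost per activity, total cost including resources)."""
    per_activity: dict[str, float] = {}
    for log in logs:
        # People cost: effort multiplied by the loaded hourly rate.
        per_activity[log.activity] = (
            per_activity.get(log.activity, 0.0) + log.effort_hours * hourly_rate
        )
    # Hardware, software and other physical resources are added once.
    total = sum(per_activity.values()) + resource_cost
    return per_activity, total

logs = [
    ActivityLog("test design", 40),
    ActivityLog("execution", 4),
    ActivityLog("maintenance", 12),
    ActivityLog("execution", 2),
]
per_activity, total = automation_cost(logs, hourly_rate=50.0, resource_cost=500.0)
print(per_activity, total)
```

Recording the same breakdown every test cycle lets the total be monitored over time, while the per-activity figures provide the extra detail when it is needed.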

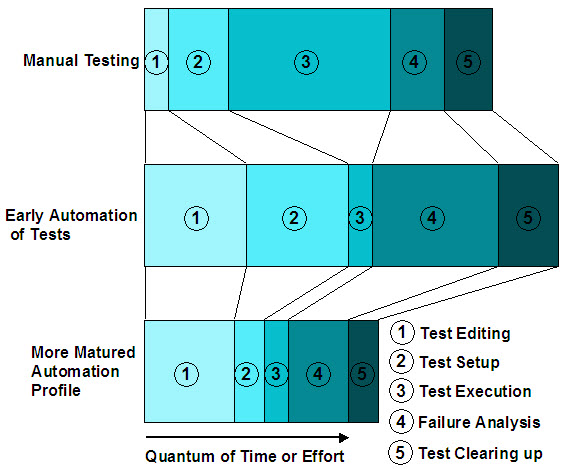

The accompanying figure outlines how some aspects of testing may change when software testing managers introduce automation in stages.

In manual testing, test execution consumes most of the effort. If those tests are automated, the time taken for test execution should go down dramatically, but the cost of other activities may increase.

The second portion of the figure shows what happens when a set of test cases is automated. Test execution takes significantly less time for the automated tests than for manual testing. However, maintaining the tests so that they can be run on a later version of the software may take more effort for automated tests than for manual ones. This is because manual testers can generally adjust the tests intuitively while executing them, whereas the necessary changes to automated tests have to be implemented specifically and precisely.

Setting up the environment and test data for automated tests needs very careful planning, which can consume a significant amount of time. If we are testing manually and find that a file is missing, we can simply fetch it; if the tool finds that a file is missing during test execution, the test will fail. Although the automated set-up itself should run very quickly, the effort to implement it will be higher than for manual set-up.

Clear-up activities will probably be more extensive with automated testing than with manual testing, mainly because automated tests create a larger number of files. The automated clear-up instructions also need to be debugged, or subsequent tests may be affected. As with automated set-up, more effort is needed in planning.

Analyzing failures often takes significantly longer with automated tests. When testing manually, we know exactly where we have been and what happened before a failure, so it does not take long to find out what is wrong. With an automated test, that sequence of events has to be reconstructed afterwards from the tool's logs and outputs.

The figure shows how justifying savings on the basis of test execution alone may be misleading if the other test activities are not taken into account.

The third portion of the figure shows what a more mature automation profile can look like. This should be our goal, but we can only tell whether we have achieved a good profile if we measure it.
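A small worked comparison makes the point concrete. The hours below are hypothetical, chosen only to mirror the pattern described above: execution drops sharply while maintenance, set-up, clear-up and failure analysis grow.

```python
# Hypothetical effort (working hours) per activity for one test cycle.
manual = {"execution": 40, "maintenance": 4, "set-up": 3,
          "clear-up": 1, "failure analysis": 2}
automated = {"execution": 4, "maintenance": 12, "set-up": 8,
             "clear-up": 4, "failure analysis": 10}

def saving(before: dict[str, float], after: dict[str, float],
           activities=None) -> float:
    """Hours saved by automation over the given activities (all if None)."""
    keys = activities if activities is not None else before.keys()
    return sum(before[k] - after[k] for k in keys)

execution_only = saving(manual, automated, ["execution"])
all_activities = saving(manual, automated)
print(f"saving claimed from execution alone: {execution_only} h")
print(f"saving across all activities:        {all_activities} h")
```

With these assumed numbers, the execution-only view claims 36 hours saved per cycle, while the full comparison shows only 12.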

Scales of measurement of efficiency can be:

a) Elapsed time (hours) to perform certain tasks;

b) Effort (working hours) to perform certain tasks.
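The two scales diverge when several people work in parallel: elapsed time is wall-clock duration, while effort adds up everyone's working hours. A minimal sketch, assuming a hypothetical work log of (person, start, end) entries for one task:

```python
from datetime import datetime

# Hypothetical work log for one task: (person, start, end).
log = [
    ("asha",  datetime(2024, 5, 6, 9, 0),  datetime(2024, 5, 6, 11, 0)),
    ("ben",   datetime(2024, 5, 6, 9, 0),  datetime(2024, 5, 6, 11, 0)),
    ("chris", datetime(2024, 5, 6, 10, 0), datetime(2024, 5, 6, 12, 0)),
]

def elapsed_hours(entries) -> float:
    """Wall-clock time from the earliest start to the latest end."""
    start = min(entry[1] for entry in entries)
    end = max(entry[2] for entry in entries)
    return (end - start).total_seconds() / 3600

def effort_hours(entries) -> float:
    """Sum of every person's working time."""
    return sum((entry[2] - entry[1]).total_seconds() / 3600 for entry in entries)

print(f"elapsed: {elapsed_hours(log)} h, effort: {effort_hours(log)} h")
```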

2) Maintainability:

Maintainability primarily refers to the ease of updating the testware when the software changes. A highly maintainable automation profile is one where it is easy to keep the tests in step with the software. The software will certainly change, so for the majority of organizations it is important that the effort of updating the automated tests is not too high; otherwise the entire test automation effort will be dropped in favor of the cheaper alternative of manual testing.

Scales of measurement of maintainability can be:

a) Average elapsed time in hours or effort in working hours per test to update the tests;

b) How often software changes take place.

We can measure maintainability in more detail by considering different types of software change, such as:

# Changes to the screen layout or the user interface.

# Changes to the business rules. Such a change need not affect every aspect of a test; e.g. a change to an interest rate business rule can affect the outputs without having any effect on the inputs.

# Changes to the format of a file or database.

# Changes to the content of a report.

# Changes in a communication protocol.

# Simple changes in functionality. A minor change in functionality should not have a major effect on the existing automated tests.

# Major changes in functionality. A major change in the software will almost certainly have a significant effect on the existing automated tests.

Thus it is important to make the tests easier to update for the most frequent changes in the software.
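To find which kinds of change to optimize for, the two scales can be combined per change type: average update effort per test, and total effort across all changes of that type (which reflects both how often it happens and what it costs each time). A sketch with hypothetical change records:

```python
from collections import defaultdict

# Hypothetical records: (change type, tests updated, effort in working hours)
updates = [
    ("screen layout", 20, 10.0),
    ("screen layout", 30, 12.0),
    ("business rule", 5, 1.0),
    ("file format", 40, 32.0),
]

def maintenance_profile(records):
    """Return {change type: (occurrences, avg hours per test, total hours)}."""
    occurrences = defaultdict(int)
    tests = defaultdict(int)
    hours = defaultdict(float)
    for kind, n_tests, effort in records:
        occurrences[kind] += 1
        tests[kind] += n_tests
        hours[kind] += effort
    return {k: (occurrences[k], hours[k] / tests[k], hours[k]) for k in occurrences}

profile = maintenance_profile(updates)
# Rank change types by total maintenance effort: the best candidates for
# making the tests easier to update.
ranked = sorted(profile, key=lambda k: profile[k][2], reverse=True)
for kind in ranked:
    count, per_test, total = profile[kind]
    print(f"{kind:15} changes={count} avg={per_test:.2f} h/test total={total:.1f} h")
```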

3) Reliability:

The reliability of an automated testing profile is related to its ability to give accurate and repeatable results.

Scales of measurement of reliability can be:

a) Percentage of tests that fail due to defects in the tests, i.e. either test design defects or test automation defects;

b) Number of additional test cycles or iterations required because of defects in the tests;

c) Number of false negatives, where the test is recorded as failed but the actual outcome is correct. This could be due to incorrect expected results;

d) Number of false positives, where the test is recorded as passed but the software is later found to contain a defect not picked up by the test. This could be due to incorrect expected results, an incorrect comparison, or a comparison that was incorrectly specified or implemented.
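A minimal sketch of how these figures might be derived, assuming each result has been triaged after investigation: whether the test failed, whether the software was actually correct, and whether a failure was caused by a defect in the test itself. The record fields are hypothetical; the false negative and false positive terms follow the definitions given above.

```python
from dataclasses import dataclass

@dataclass
class TriagedResult:
    test_id: str
    failed: bool        # recorded outcome of the automated test
    software_ok: bool   # after investigation: was the software's behaviour correct?
    test_defect: bool   # failure caused by a test design or automation defect

def reliability_measures(results: list[TriagedResult]) -> dict[str, float]:
    total = len(results)
    test_defect_failures = sum(r.failed and r.test_defect for r in results)
    # False negative (as defined above): recorded as failed, outcome correct.
    false_negatives = sum(r.failed and r.software_ok for r in results)
    # False positive (as defined above): recorded as passed, defect found later.
    false_positives = sum((not r.failed) and (not r.software_ok) for r in results)
    return {
        "pct_failing_due_to_test_defects": 100.0 * test_defect_failures / total,
        "false_negatives": false_negatives,
        "false_positives": false_positives,
    }

results = [
    TriagedResult("T1", failed=True, software_ok=True, test_defect=True),
    TriagedResult("T2", failed=True, software_ok=False, test_defect=False),
    TriagedResult("T3", failed=False, software_ok=False, test_defect=False),
    TriagedResult("T4", failed=False, software_ok=True, test_defect=False),
]
print(reliability_measures(results))
```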

4) Flexibility:

The flexibility of an automated testing profile is related to the extent to which it allows us to work with different subsets of tests. For example, a more flexible profile will allow test cases to be combined in many different ways for different test objectives.

Scales of measurement of flexibility can be:

a) Time taken to test an emergency fix on an old release, e.g. a profile that allows this in two hours is more flexible than one that takes two days;

b) Time taken to identify a set of test cases for a specific purpose, e.g. all the tests that failed last time;

c) Number of selection criteria that can be used to identify a subset of test cases;

d) Time or effort needed to restore a test case that has been archived.
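Measures b) and c) depend largely on how the test cases are catalogued. A sketch, assuming each test case carries hypothetical attributes (tags, last result, an archived flag); each attribute that can be filtered on is one selection criterion, and archived tests are excluded unless explicitly requested.

```python
from dataclasses import dataclass, field

@dataclass
class CatalogedTest:
    name: str
    tags: set[str] = field(default_factory=set)
    last_result: str = "not run"   # "passed", "failed" or "not run"
    archived: bool = False

def select(cases, *, tag=None, last_result=None, include_archived=False):
    """Return the test cases matching every criterion that was given."""
    chosen = []
    for case in cases:
        if case.archived and not include_archived:
            continue
        if tag is not None and tag not in case.tags:
            continue
        if last_result is not None and case.last_result != last_result:
            continue
        chosen.append(case)
    return chosen

suite = [
    CatalogedTest("login_ok", {"smoke", "security"}, "passed"),
    CatalogedTest("login_bad_password", {"security"}, "failed"),
    CatalogedTest("interest_calc", {"regression"}, "failed"),
    CatalogedTest("old_report", {"regression"}, "failed", archived=True),
]

# e.g. "all the tests that failed last time"
rerun = select(suite, last_result="failed")
print([case.name for case in rerun])
```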

5) Usability:

An automated testing profile may have different usability requirements for different types of users, such as testers or test automation engineers. For example, a profile may be designed for software engineers with certain technical skills and need to be easy for those engineers to use; that same profile may not be usable by non-technical people. Usability must therefore be considered in terms of the intended users of the profile.

Scales of measurement of usability can be:

a) Time taken to add, say, twenty new test cases of a similar type to an existing profile;

b) Time or effort required to ascertain the results of running a set of automated test cases, e.g. how many passed and failed;

c) Training time needed for a user of the automation profile to become confident and productive. This may apply in different ways to the testers who design or execute the tests and to the test automation engineers who build the tests;

d) Time or effort needed to discount defects that are of no interest for a particular set of automated tests, e.g. a minor defect which is not going to be fixed until some future release;

e) How well the users like the profile and how easy they perceive it to be to use. This could be measured by a survey of the test automation users.
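Measures b) and d) become cheap when result summarisation is automated. A sketch, assuming results arrive as (test name, outcome) pairs and that failures already traced to known, deferred defects are listed in a hypothetical `known_failures` set so they are reported separately instead of being counted as new failures:

```python
from collections import Counter

def summarize(results, known_failures=frozenset()):
    """Count outcomes, reporting failures on known deferred defects separately."""
    counts = Counter()
    for name, outcome in results:
        if outcome == "failed" and name in known_failures:
            counts["known defect"] += 1
        else:
            counts[outcome] += 1
    return counts

results = [("t1", "passed"), ("t2", "failed"), ("t3", "failed"), ("t4", "passed")]
print(summarize(results, known_failures={"t3"}))
```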

6) Robustness:

Robustness refers to the ability of the testware to cope with unexpected events without tripping up. The robustness of an automated testing profile is how useful the automated tests are for unstable or rapidly changing software. A robust profile can cope with more changes, or with a wider variety of changes, to the software. It will require few or no changes to the automated tests, and will still provide useful information when there are many defects in the software.

Scales of measurement of robustness can be:

a) Number of tests that fail because of a single software defect. In a robust profile, a single defect causes only a few tests to fail rather than many tests failing for the same reason;

b) Frequency of automated test failures due to unexpected events (for example, the disconnection of a communication line), the number of automated tests that fail because of the same unexpected event, or the number of automated tests that fail due to unexpected events compared with the number of unique defects found by that set of tests;

c) Mean time to fail i.e. the average elapsed time from the start of a test until an unexpected event causes it to fail;

d) Time taken to investigate the causes of unexpected events that result in test failures.
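A sketch of measures a) to c), assuming each failure has been traced either to a software defect ID or to an unexpected event, and that the time from test start to failure is recorded. The record layout, IDs and times are hypothetical.

```python
from collections import Counter
from statistics import mean

# Hypothetical failure records:
# (test, cause kind, cause id, minutes from test start to failure)
failures = [
    ("t1", "defect", "D-17", 12.0),
    ("t2", "defect", "D-17", 3.0),
    ("t3", "defect", "D-17", 8.0),
    ("t4", "defect", "D-21", 20.0),
    ("t5", "event", "comms line dropped", 45.0),
    ("t6", "event", "comms line dropped", 30.0),
]

def robustness_measures(records):
    per_defect = Counter(cid for _, kind, cid, _ in records if kind == "defect")
    events = [r for r in records if r[1] == "event"]
    per_event = Counter(r[2] for r in events)
    return {
        # a) most tests failed by a single defect (lower is more robust)
        "max_tests_per_defect": max(per_defect.values(), default=0),
        # b) most tests failed by the same unexpected event
        "max_tests_per_event": max(per_event.values(), default=0),
        # b) event-caused failures relative to unique defects found
        "event_failures_per_unique_defect":
            len(events) / len(per_defect) if per_defect else None,
        # c) mean time to fail, over runs stopped by an unexpected event
        "mean_minutes_to_fail": mean(r[3] for r in events) if events else None,
    }

print(robustness_measures(failures))
```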

7) Portability:

The portability of an automated testing profile is related to its ability to run in different environments.

Scales of measurement of portability can be:

a) Time or effort needed to make a set of automated tests run successfully in a new environment (e.g. a different database management system) or on a new hardware platform;

b) Time or effort needed to make a set of automated tests run using a different test tool;

c) Number of different environments in which the automated tests will run.
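Measure c) can be read off a simple environment matrix. A sketch, assuming the target environments are combinations of platform and database management system, and that a hypothetical set records where the suite has already run successfully:

```python
from itertools import product

platforms = ["Windows", "Linux"]
databases = ["PostgreSQL", "Oracle"]

# Hypothetical record of environments where the suite has run successfully.
passed_in = {("Windows", "PostgreSQL"), ("Windows", "Oracle"), ("Linux", "PostgreSQL")}

targets = list(product(platforms, databases))
covered = [env for env in targets if env in passed_in]
missing = [env for env in targets if env not in passed_in]
print(f"runs in {len(covered)} of {len(targets)} target environments; missing: {missing}")
```

Tracking the time or effort spent moving each entry from `missing` to `covered` gives measure a) as well.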

Selection of Different Quality Attributes:

Depending on the organization's objectives, the quality attributes are targeted according to their importance. When setting these objectives, software testing managers often find that the objectives are not well understood and may not be directly measurable at all.

1) For organizations working on dynamically changing software:

In the case of dynamically changing software with frequent releases on many platforms, the most important attribute will be the ease of maintaining the tests.

When the software runs on different platforms, portability is also very important. In this case, it may not matter much if the tests are not very reliable or robust, as the time saved by being able to test quickly gives the organization an edge over its competitors.

Occasional manual intervention is also acceptable in the automated tests.

2) For organizations with a large number of technical tests:

When there are many technical tests, the software is very stable with only minor changes from time to time, and it runs on just one platform, flexibility, reliability and robustness are of prime importance, whereas usability, portability and maintainability matter less.

3) For organizations just beginning with automation:

An automation profile that uses only the capture/replay facility of a tool would be quite usable and reliable in such a situation. It may not be very robust, flexible, maintainable or portable; that may be acceptable in the first few months of test automation, but it would not remain a good profile after a few years.

Conclusion:

1) The best approach is to identify just 3 or 4 measures suitable for monitoring our testing and test automation activities against the most important objectives.

2) Closely monitor these measures for a few months and see what can be learnt from them.

3) We should not hesitate to change what is being measured if a measure fails to provide useful information.
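Choosing the three or four attributes to monitor can be as simple as ranking them by the importance the organization assigns. A sketch, assuming hypothetical weights for the case described earlier of stable, single-platform software with many technical tests; the weight for efficiency, which that case does not discuss, is an arbitrary placeholder.

```python
# Hypothetical importance weights (1 = low, 5 = high) for stable software with
# many technical tests running on one platform.
weights = {
    "flexibility": 5, "reliability": 5, "robustness": 4, "efficiency": 3,
    "usability": 2, "portability": 1, "maintainability": 2,
}

def choose_attributes(weights: dict[str, int], k: int = 3) -> list[str]:
    """Pick the k most important attributes; ties are broken alphabetically."""
    ranked = sorted(weights, key=lambda name: (-weights[name], name))
    return ranked[:k]

print(choose_attributes(weights))
```

Revisiting the weights after a few months of monitoring is one way to act on the advice to change measures that are not informative.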


An expert in R&D, online training and publishing, he holds an M.Tech. (Honours) and has been part of the STG team since its inception.