ISTQB Agile Tester Extension Exam Theory Study Material Part 12

Study this entire question bank, which covers the theory portion with detailed explanations. This study resource follows the latest syllabus.

Just one hour spent brushing up your knowledge right before the ISTQB Agile Tester Extension Exam can be a great help in passing it.

Set of 10 Questions (Q. 111 to 120) with detailed explanation

Q. 111: How is testing effort estimated based on content and risk?

The agile team estimates the testing effort during release planning.

Reliable estimation of testing effort is necessary for a smooth pace of work and for maintaining a good velocity.

# During iteration planning, user stories are estimated through planning poker in story points.

# Story points indicate the implementation effort.

# The risk level of a story influences its story points, because a higher-risk story needs more testing effort.

<<<<<< =================== >>>>>>

Q. 112: What is the process of the “Planning Poker” technique?

# A lot of uncertainty and variability surrounds the planning of testing effort in agile projects, which makes estimation difficult.

# In Scrum, estimating is a team activity. For each story, the whole team participates in the estimation process.

# Planning poker is a common technique for estimating how long the work will take in Agile projects.

# Planning poker (sometimes called Scrum poker) is a consensus-based technique in which the whole team takes part. This makes estimating faster and more accurate and reduces the chance that something is overlooked.

The steps of the Planning Poker game are:

Before the game starts, the product owner makes sure that a list of all features to be estimated is available.

Step-1: Form a group of no more than 10 estimators and the Scrum Product Owner. The Scrum Product Owner can participate, but cannot be an estimator.

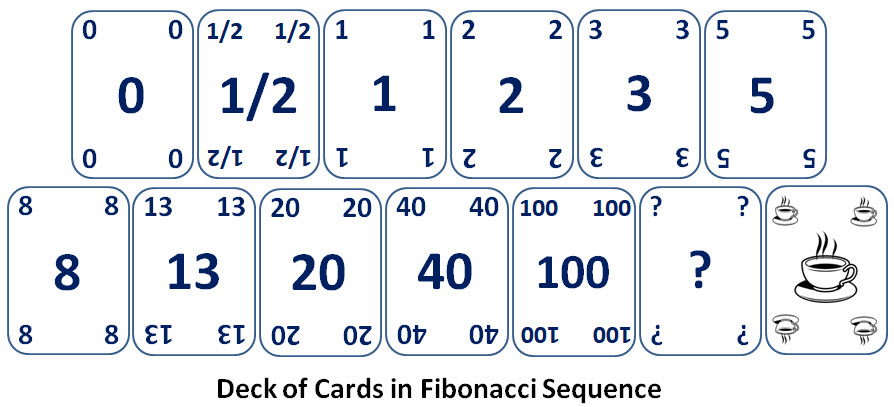

Step-2: Each estimator gets a deck of cards with values similar to the Fibonacci sequence (i.e., 0, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, …) or any other progression like 0, 1, 2, 3, 5, 8, 13, 20, 40, and 100.

Note: The Fibonacci sequence is recommended because the numbers in the sequence reflect that uncertainty grows proportionally with the size of the story.

Step-3: The Scrum Product Owner reads the description of the user story or theme to the estimators. The estimators ask questions and the Scrum Product Owner explains in more detail.

If many stories have to be estimated, a time limit of, say, one minute can be set for the explanation.

If the time limit is reached and the estimators still do not understand the story, it is a sign that the story has to be rewritten.

Step-4: Every estimator privately selects a card representing his or her estimate, expressed in story points, effort days, or any other unit agreed in advance.

Note: A high estimate value usually means that the story is not well understood or should be broken down into multiple smaller stories.

Step-5: Each estimator then places the card face down on the table. After everyone has chosen a card, the cards are flipped over to reveal the selections.

Step-6: If the estimates vary widely, the owners of the high and low estimates discuss the reasons why their estimates are so different. All estimators should participate in the discussion.

Step-7: Repeat from Step-4 until the estimates converge and a consensus is reached. To limit the number of poker rounds, the team can apply a rule such as using the median value or the highest score.

Step-8: This game is repeated until all stories are estimated.

Such games ensure a reliable estimate of the effort needed to complete product backlog items requested by the product owner and help improve collective knowledge of what has to be done.
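The steps above can be sketched in a few lines of Python. This is only an illustration, not a prescribed procedure: the convergence rule (all cards within one deck position of each other), the three-round cap, and the names `nearest_card`, `has_converged`, and `play_story` are assumptions made for the example. The deck is the alternative progression from Step-2, and the fallback uses the median rule mentioned in Step-7.

```python
from statistics import median

# Card values from Step-2 (the alternative progression given in the text).
DECK = [0, 1, 2, 3, 5, 8, 13, 20, 40, 100]


def nearest_card(value, deck=DECK):
    """Return the deck card closest to value; ties go to the higher card."""
    return min(deck, key=lambda card: (abs(card - value), -card))


def has_converged(estimates, deck=DECK, max_gap=1):
    """Assumed rule: the lowest and highest cards shown are at most
    max_gap positions apart in the deck."""
    positions = [deck.index(card) for card in estimates]
    return max(positions) - min(positions) <= max_gap


def play_story(rounds, max_rounds=3):
    """Estimate one story from successive rounds of revealed cards.

    rounds is a list of rounds; each round lists the cards revealed in Step-5.
    Returns (estimate, rounds_played).
    """
    if not rounds:
        raise ValueError("at least one round of estimates is required")
    played = rounds[:max_rounds]
    for number, estimates in enumerate(played, start=1):
        if has_converged(estimates):
            # Consensus reached: take the card nearest to the median.
            return nearest_card(median(estimates)), number
    # Step-7 fallback: stop after max_rounds and apply the median rule.
    return nearest_card(median(played[-1])), len(played)


if __name__ == "__main__":
    story_rounds = [
        [3, 5, 13, 40],   # wide spread: high and low estimators explain (Step-6)
        [5, 8, 8, 13],    # closer, but still two deck positions apart
        [8, 8, 8, 13],    # adjacent cards only: treated as consensus
    ]
    print(play_story(story_rounds))
```

A team that prefers the "highest score" rule from Step-7 could replace the median call with `max(played[-1])`.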

<<<<<< =================== >>>>>>

Q. 113: What can serve as the test basis in Agile projects?

1) User stories

2) Experience from previous projects.

3) Existing functions, features, and quality characteristics of the system.

4) Code, architecture, and design.

5) User profiles like context, system configurations, and user behaviour.

6) Information on defects from existing and previous projects.

7) A categorization of defects in a defect taxonomy.

8) Applicable standards like DO-178B for avionics software

9) User documentation

10) Quality risks documentation

<<<<<< =================== >>>>>>

Q. 114: To be testable, which topics should the acceptance criteria cover?

1) Functional behaviour:

The externally observable behaviour, with user actions as input, operating under certain configurations.

2) Quality characteristics:

How the system performs the specified behaviour. The characteristics may also be referred to as quality attributes or non-functional requirements. Common quality characteristics are performance, reliability, usability, etc.

3) Scenarios (use cases):

A sequence of actions between a user and the system, to accomplish a specific goal or business task.

4) Business rules:

Activities that can only be performed in the system under certain conditions defined by outside procedures and constraints (e.g. procedures used by an insurance company to handle insurance claims).

5) External interfaces:

Descriptions of the connections between the system to be developed and the outside world. External interfaces can be divided into different types (user interface, interface to other systems, etc.).

<<<<<< =================== >>>>>>

Q. 115: What important information do testers need during an iteration?

1) User stories

2) Acceptance criteria associated with user stories

3) Information on how the system is supposed to work and be used

4) Information on the system interfaces that can be used/accessed to test the system

5) Information on whether current tool support is sufficient

6) Information on whether enough knowledge and skill is available to perform the necessary tests

The above information is important in deciding whether a particular activity can be considered done.

<<<<<< =================== >>>>>>

Q. 116: Describe an example of a user story and its Acceptance Criteria (DoD)?

User story:

As a customer, I want to be able to open a popup window that shows the last 30 transactions on my account, with backward/forward arrows allowing me to scroll through the transaction history, so that I can see my transaction history without closing the “enter payment amount” window.

Acceptance Criteria (DoD):

1) Initially populated with 30 most-recent transactions; if no transactions, display “No transaction history yet”

2) Backward scrolls back 10 transactions; forward scrolls forward 10 transactions

3) Transaction data retrieved only for current account

4) Displays within 2 seconds of pressing “show transaction history” control

5) Backward/forward arrows at the bottom

6) Conforms to corporate UI standard

7) Can minimize or close pop-up through standard controls at upper right

8) Properly opens in all supported browsers
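Acceptance criteria written this precisely translate almost directly into automated checks. The sketch below models criteria 1 to 3 only. The class `TransactionHistoryView`, its method names, and the transaction record layout (`account`, `seq`) are hypothetical and stand in for whatever the real application provides. Stopping the backward scroll at the oldest transaction is also an assumption, since the criteria do not say what happens there.

```python
PAGE_SIZE = 30      # criterion 1: 30 most-recent transactions shown initially
SCROLL_STEP = 10    # criterion 2: each arrow moves by 10 transactions
EMPTY_MESSAGE = "No transaction history yet"


class TransactionHistoryView:
    """Hypothetical model of the popup's data logic, not a real UI component."""

    def __init__(self, all_transactions, account_id):
        # Criterion 3: keep only the current account's transactions.
        own = [t for t in all_transactions if t["account"] == account_id]
        # Newest first; "seq" is an assumed increasing sequence number.
        self._items = sorted(own, key=lambda t: t["seq"], reverse=True)
        self._offset = 0

    def visible(self):
        """Return the transactions currently shown, or the empty message."""
        if not self._items:
            return EMPTY_MESSAGE
        return self._items[self._offset:self._offset + PAGE_SIZE]

    def backward(self):
        """Scroll 10 transactions back in time, stopping at the oldest page."""
        last_start = max(0, len(self._items) - PAGE_SIZE)
        self._offset = min(self._offset + SCROLL_STEP, last_start)

    def forward(self):
        """Scroll 10 transactions forward, stopping at the newest page."""
        self._offset = max(0, self._offset - SCROLL_STEP)


if __name__ == "__main__":
    data = [{"account": "A", "seq": n} for n in range(1, 46)]
    data += [{"account": "B", "seq": n} for n in range(1, 6)]
    view = TransactionHistoryView(data, "A")
    print(len(view.visible()), view.visible()[0]["seq"])
    view.backward()
    print(view.visible()[0]["seq"])
```

Criteria such as the 2-second display time or browser support need performance and compatibility tests against the real system and are outside the scope of this sketch.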

<<<<<< =================== >>>>>>

Q. 117: Describe an example of Definition of Done (DoD) for Unit testing?

Unit testing is considered complete when the following criteria are met:

1) 100% decision coverage where possible, with careful reviews of any infeasible paths

2) Static analysis performed on all code

3) No unresolved major defects (ranked based on priority and severity)

4) No known unacceptable technical debt remaining in the design and the code

5) All code, unit tests, and unit test results reviewed

6) All unit tests automated

7) Important characteristics are within agreed limits (e.g., performance)
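To make criterion 1 concrete, the following sketch shows what 100% decision coverage means for a small unit: every decision in the code must evaluate to both true and false at least once. The function `shipping_fee` and its fee values are invented for the illustration.

```python
def shipping_fee(order_total, is_member):
    """Hypothetical unit under test containing two decisions."""
    if order_total <= 0:                    # decision 1
        raise ValueError("order_total must be positive")
    if is_member or order_total >= 50:      # decision 2
        return 0.0
    return 4.99


def test_rejects_non_positive_total():
    # Decision 1 evaluates to True.
    try:
        shipping_fee(0, False)
    except ValueError:
        return
    raise AssertionError("expected ValueError")


def test_member_ships_free():
    # Decision 1 evaluates to False, decision 2 to True.
    assert shipping_fee(20, True) == 0.0


def test_small_non_member_order_pays_fee():
    # Decision 1 evaluates to False, decision 2 to False.
    assert shipping_fee(20, False) == 4.99


if __name__ == "__main__":
    test_rejects_non_positive_total()
    test_member_ships_free()
    test_small_non_member_order_pays_fee()
    print("all decision outcomes exercised")
```

These three tests cover both outcomes of both decisions, yet they never exercise the `order_total >= 50` condition on its own. Decision coverage does not require that, which is one reason a DoD usually lists further criteria beside it.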

<<<<<< =================== >>>>>>

Q. 118: Describe an example of Definition of Done (DoD) for Integration testing?

Integration testing is considered complete when the following criteria are met:

1) All functional requirements tested, including both positive and negative tests, with the number of tests based on size, complexity, and risks

2) All interfaces between units tested

3) All quality risks covered according to the agreed extent of testing

4) No unresolved major defects (prioritized according to risk and importance)

5) All defects found are reported

6) All regression tests automated, where possible, with all automated tests stored in a common repository

<<<<<< =================== >>>>>>

Q. 119: Describe an example of Definition of Done (DoD) for System testing?

System testing is considered complete when the following criteria are met:

1) End-to-end tests of user stories, features, and functions

2) All user personas covered

3) The most important quality characteristics of the system covered (e.g., performance, robustness, reliability)

4) Testing done in a production-like environment(s), including all hardware and software for all supported configurations, to the extent possible

5) All quality risks covered according to the agreed extent of testing

6) All regression tests automated, where possible, with all automated tests stored in a common repository

7) All defects found are reported and possibly fixed

8) No unresolved major defects (prioritized according to risk and importance)
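A Definition of Done like the ones above works as a checklist: the test level is done only when every criterion is met. A minimal sketch of such a gate follows. The `Criterion` type, the helper names, and the sample statuses are assumptions for illustration and are not part of any ISTQB-defined tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One Definition of Done entry and whether the team has met it."""
    name: str
    met: bool


def unmet_criteria(criteria):
    """Return the names of all criteria that are not yet met."""
    return [c.name for c in criteria if not c.met]


def is_done(criteria):
    """The test level is done only when no criterion remains unmet."""
    return not unmet_criteria(criteria)


if __name__ == "__main__":
    # Sample statuses are made up for the example.
    system_test_dod = [
        Criterion("End-to-end tests of user stories, features, and functions", True),
        Criterion("All user personas covered", True),
        Criterion("No unresolved major defects", False),
    ]
    print(is_done(system_test_dod))
    print(unmet_criteria(system_test_dod))
```

Listing the unmet entries, rather than returning only a yes or no, gives the team a concrete basis for deciding what still has to happen in the iteration.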

<<<<<< =================== >>>>>>

Q. 120: Describe an example of Definition of Done (DoD) for User Stories?

The definition of done for user stories may be determined by the following criteria:

1) The user stories selected for the iteration are complete, understood by the team, and have detailed, testable acceptance criteria

2) All the elements of the user story, including the user story acceptance tests, have been specified, reviewed, and completed

3) Tasks necessary to implement and test the selected user stories have been identified and estimated by the team


An expert on R&D, Online Training and Publishing. He is M.Tech. (Honours) and is a part of the STG team since inception.