Integration Testing, a Key Software Testing Activity: Why, Who & How?

After the test progression criteria for units, sub-modules, and modules have been met, it is necessary to verify that these elements work correctly together. The role of integration testing is to conduct such a verification by an orderly progression of testing in which all the individually tested software parts are combined and tested together until the entire system has been integrated.

Let us first gather the answers to the Why, Who & How of integration testing.

Q. 1: Why do we need to carry out Integration Testing?

The goal of integration testing is to detect defects that occur on the interfaces of the interacting elements: units, sub-modules, modules, and multi-module components. This type of testing verifies the formats of messages sent between services, the types and the number of parameters passed between interacting elements of code, and whether these elements are consistent with respect to their expected mutual functionalities. Depending on the nature of the test cases, integration testing may additionally expose functionality, security, and usability defects.

Q. 2: Who should carry out Integration Testing?

Developers perform most of the integration testing. Testers conduct integration tests of multiple modules and multi-module components.

Q. 3: How should we do the Integration Testing?

Integration testing should be performed in an iterative and incremental manner. The gradual process facilitates early isolation of possible interface inconsistencies. All module interfaces should be progressively exercised, and the integrated elements should be tested with both valid and invalid inputs. The callable interfaces exposed by an integrated code segment should be invoked by the other units that are part of the integrated system. Where multiple units invoke a unit under an integration test, integration testing should cover calls from at least two different calling units.
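As a rough illustration of these guidelines, the sketch below uses Python's standard `unittest` module. The function names (`compute_discount`, `checkout_total`, `invoice_line`) and the discount rules are hypothetical and invented only for this example. The shared unit is exercised through two different calling units, and each caller is tested with one valid and one invalid input.

```python
import unittest


# Hypothetical unit under integration test: a pricing function.
def compute_discount(total, customer_tier):
    """Return the discount amount for an order total and a customer tier."""
    if total < 0:
        raise ValueError("total must be non-negative")
    rates = {"standard": 0.0, "gold": 0.10}
    if customer_tier not in rates:
        raise ValueError(f"unknown tier: {customer_tier}")
    return round(total * rates[customer_tier], 2)


# Hypothetical calling unit 1: checkout.
def checkout_total(items, customer_tier):
    total = sum(price for _, price in items)
    return round(total - compute_discount(total, customer_tier), 2)


# Hypothetical calling unit 2: invoicing.
def invoice_line(total, customer_tier):
    discount = compute_discount(total, customer_tier)
    return f"Total {total:.2f}, discount {discount:.2f}"


class PricingIntegrationTest(unittest.TestCase):
    """Exercises compute_discount through both of its callers."""

    def test_checkout_with_valid_input(self):
        items = [("book", 20.0), ("pen", 5.0)]
        self.assertEqual(checkout_total(items, "gold"), 22.5)

    def test_checkout_with_invalid_tier(self):
        with self.assertRaises(ValueError):
            checkout_total([("book", 20.0)], "platinum")

    def test_invoice_with_valid_input(self):
        self.assertEqual(invoice_line(100.0, "gold"),
                         "Total 100.00, discount 10.00")

    def test_invoice_with_invalid_total(self):
        with self.assertRaises(ValueError):
            invoice_line(-1.0, "gold")
```

If the block is saved as a module, the tests can be run with `python -m unittest <module_name>`. In a real project the three functions would live in separate modules; they are kept in one file here only to keep the sketch self-contained.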

Integration test cases are built by extending the existing module tests so they span the multiple modules and multi-module components until the entire system is included. By extending the unit, sub-module, and module test cases to span the entire system, a test suite is established that records and tracks the results at every checkpoint that was previously verified, and instantly notifies the team when a code modification introduces a problem into any part of the system.

An alternative way, recently made possible by new technology, is to exercise a set of integrated elements with realistic use cases, and then have unit test cases generated automatically. The results of these tests should then be manually verified.

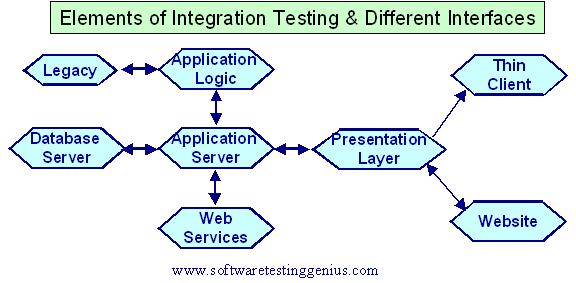

To be effective, integration testing should be applied to each element of the integrated application, as exemplified in the figure above.

Integration tests should be repeatable so that they can be used to verify the system after it is modified in current and/or future development iterations. Even if integration tests require partial or full manual testing, they can be made repeatable if they are recorded carefully. This can be done either by documenting each step in detail or by using an automated test record/playback tool.

Some additional questions that come to mind are:

How do we measure Integration Tests?

Integration tests are black box tests. Therefore, measures applied to black box testing apply to integration tests. A more specific measure, which can be tracked during integration testing, is the number of passed and failed service, component, and module interaction tests. These are the tests that verify that the proper parameters are passed and that the calling sequences between the interacting modules are correct. The interface coverage should be measured and used as a test progression criterion. At the module level, interface coverage should be 100% before testing progresses to the next integration level or to acceptance testing.
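One simple way to picture interface coverage is as the percentage of declared module interfaces that at least one integration test has exercised. The sketch below assumes that definition, which the article does not spell out; the interface names and both helper functions are hypothetical.

```python
# Hypothetical inventory of the interfaces a module exposes.
declared_interfaces = {
    "orders.create",
    "orders.cancel",
    "billing.charge",
    "billing.refund",
}

# Hypothetical set of interfaces exercised by the integration tests so far.
exercised_interfaces = {
    "orders.create",
    "orders.cancel",
    "billing.charge",
}


def interface_coverage(declared, exercised):
    """Return the percentage of declared interfaces that were exercised."""
    if not declared:
        raise ValueError("no interfaces declared")
    covered = declared & exercised  # ignore calls to undeclared interfaces
    return 100.0 * len(covered) / len(declared)


def may_progress(declared, exercised):
    """Apply the 100% criterion: every declared interface must be exercised."""
    return declared <= exercised


coverage = interface_coverage(declared_interfaces, exercised_interfaces)
missing = sorted(declared_interfaces - exercised_interfaces)
print(f"interface coverage: {coverage:.0f}%, not yet exercised: {missing}")
```

In this example three of the four declared interfaces are exercised, so `may_progress` returns `False` until a test for `billing.refund` is added.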

How do we track the Integration Tests?

Integration tests and their pass and failure rates should be tracked as a subset of black box tests. Interface coverage should be tracked and used to determine progression to the next testing step.

How do we automate the Integration Tests?

Using record/playback technology, the developer or tester can exercise the application functionality targeted for testing while the tool automatically designs test cases with real data that represents the paths taken through the application. In such situations, no coding or scripting is required. The result is a library of test cases against which new code can be tested to ensure it meets specifications and does not break existing functionality.
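Commercial record/playback tools work at a much larger scale, but the underlying idea can be sketched in a few lines. The `Recorder` class and the `shipping_cost` functions below are hypothetical and do not represent any particular tool: calls made while exercising the code are captured together with their results, and the captured cases are later replayed against a newer version of the code.

```python
import functools


class Recorder:
    """Minimal record/playback sketch: capture calls, replay them later."""

    def __init__(self):
        self.cases = []  # list of (function name, args, kwargs, result)

    def record(self, func):
        """Wrap func so that every call and its result is stored."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            self.cases.append((func.__name__, args, kwargs, result))
            return result
        return wrapper

    def replay(self, funcs):
        """Re-run recorded calls against funcs; return the mismatches."""
        failures = []
        for name, args, kwargs, expected in self.cases:
            actual = funcs[name](*args, **kwargs)
            if actual != expected:
                failures.append((name, args, kwargs, expected, actual))
        return failures


# Hypothetical application code being exercised.
def shipping_cost(weight_kg, express=False):
    base = 5 + 2 * weight_kg
    return base * 2 if express else base


# Hypothetical later revision with a changed per-kilogram rate.
def shipping_cost_v2(weight_kg, express=False):
    base = 5 + 3 * weight_kg
    return base * 2 if express else base


recorder = Recorder()
recorded_shipping_cost = recorder.record(shipping_cost)

# "Exercise the application": each call becomes a stored test case.
recorded_shipping_cost(1)
recorded_shipping_cost(3, express=True)

# Replay against the unchanged code, then against the revision.
unchanged_failures = recorder.replay({"shipping_cost": shipping_cost})
revised_failures = recorder.replay({"shipping_cost": shipping_cost_v2})
```

Here `unchanged_failures` is empty, while `revised_failures` contains both recorded cases because the revision changes the computed cost. Note that a recording captures what the code did, not what it should have done, so recorded results still need to be reviewed before they are trusted as expected values.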

Advantages of using technology to automate integration testing:

1) Fast and Easy Generation of Low-Maintenance Test Suite.

Developers, testers, or QA staff members can automatically create realistic unit test cases by simply exercising the application.

2) Automated Creation of a Thorough Regression Test Suite.

Developers can verify new code functionality as soon as it is completed, and then the team can run the generated test cases periodically to determine whether new or modified code impacted previously verified functionality.

3) Ability to Test a Full Range of Application Functionality on a Single Machine Independent of a Complex Testing Environment.

For applications that contain database interactions or calls to other external data sources, processes, services, etc., automated tools generate test cases that represent those behaviors and develop test case stubs as needed. These test cases can be used to test complex application behavior from a single machine (which may be a developer's or tester's desktop) without requiring live data connections or a fully staged test environment.
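The stubbing idea in point 3 can also be shown by hand. The sketch below uses `unittest.mock.Mock` from the Python standard library to stand in for a database and an external rates service, so the test runs on a single machine with no live connections. The `order_summary` function and the `fetch_order` and `get_rate` methods are hypothetical names chosen for this example; automated tools would generate comparable stubs rather than require them to be written manually.

```python
from unittest.mock import Mock


# Hypothetical unit that depends on a database and an external service.
def order_summary(order_id, db, rates_service):
    order = db.fetch_order(order_id)
    if order is None:
        raise LookupError(f"order {order_id} not found")
    rate = rates_service.get_rate(order["currency"], "USD")
    return {"id": order_id, "total_usd": round(order["total"] * rate, 2)}


# Stub for the database: returns a canned order record.
db_stub = Mock()
db_stub.fetch_order.return_value = {"currency": "EUR", "total": 200}

# Stub for the external rates service: returns a canned exchange rate.
rates_stub = Mock()
rates_stub.get_rate.return_value = 1.5

summary = order_summary(42, db_stub, rates_stub)

# Check the result and the way the interfaces were called.
assert summary == {"id": 42, "total_usd": 300.0}
db_stub.fetch_order.assert_called_once_with(42)
rates_stub.get_rate.assert_called_once_with("EUR", "USD")

# Invalid case: the database stub reports that the order is missing.
db_stub.fetch_order.return_value = None
try:
    order_summary(99, db_stub, rates_stub)
    missing_order_detected = False
except LookupError:
    missing_order_detected = True
```

Besides checking the returned value, the stubs record how they were called, which makes it possible to verify the parameters passed across each interface. Stubs verify only the caller's side of the contract, so the same interfaces still need to be exercised against the real database and service at a later integration level.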


An expert on R&D, Online Training and Publishing. He holds an M.Tech. (Honours) and has been a part of the STG team since its inception.